Over the past few days, I’ve received a number of clever holiday and Lean-themed videos, maps, and stories that I thought I’d share. I’m sure[…]

When you think of value stream transformation, what are the most common desired outcomes that come to mind? Shorter lead times? Higher quality? Reduced expenses?[…]

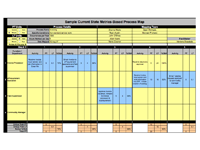

Any leader or skilled improvement professional knows that metrics are necessary to define what success looks like, measure progress toward a defined target, and assess[…]

In 2012, I spoke at the Lean HR Summit, which is one of the many excellent annual Summits delivered by Lean Frontiers. I had heard about[…]

One thing that there’s no shortage of in life is criticism about things we don’t fully understand—or haven’t experienced success with. Another thing there’s no[…]

As many of you know, I’m a rabid fan of clarity (along with focus, discipline, and engagement, the foundational organizational behaviors that I address in[…]

Mike Osterling and I decided to write our latest book, Value Stream Mapping, to deepen people’s understanding about this powerful improvement methodology. In particular, we wanted[…]

One of the joys of writing a book is interacting with readers. Many authors experience forehead-thumping “duh!” moments as they hear questions and realize that they[…]

I love how conversations can challenge one’s thinking and spark new ideas. Interviews—for a new job, a board position, or with the media—are particularly rich[…]

It’s difficult to say when and where the concept of “business” was born. It’s often attributed to ancient Roman law and to British law in[…]

Friday was a deeply gratifying day. It was one of those joyful days that, as an improvement consultant/coach and change “facilitator,” makes up for those[…]

In The Outstanding Organization, I assert that outstanding organizations operate with high degrees of clarity, focus, discipline, and engagement. In the chapter on clarity, I present the various[…]

Value Stream Mapping has been released. One of the most common interview questions I’ve been getting is: Why this book? Why now? The topic may seem a[…]

In 2009, Inc. magazine listed “value-add” on its list of 15 Business Buzzwords We Don’t Want to Hear. In 2012, “value-add” was again reviled, this[…]

Finally, there’s a glimmer of hope in an industry known for abysmal customer service. I flew on Canada-based WestJet several times this year and found[…]

The holiday season is my favorite time of year for two reasons: the holidays themselves and business planning. Proper business planning requires significant reflection, an activity[…]

I’ve long been a fan of ease, which is a large reason why Lean management has always appealed to me—and why I’m such a fan[…]

Those of you who’ve read my recent book, The Outstanding Organization, know that clarity, focus, discipline, and engagement are the four core behaviors required to[…]

For many months now, I’ve had a rash of random encounters and conversations with people who mention in one form or another that they want[…]

The world lost one of its brightest business leaders this week, Eiji Toyoda. Fortunately, he left behind a legacy of leadership philosophies and practices that[…]

I love Twitter! I come across all kinds of tidbits that get me thinking in new directions. The key is to follow smart people. Case in[…]

One of the things that pains me the most is when I hear people saying they can’t make improvement because “Corporate” won’t allow it or[…]

Today I’m featuring a guest post by Tiffany Mock, a personal productivity consultant. Tiffany shares her take on how to better manage email. In supporting companies’[…]

Yes. It really does. Deployed properly, that is. One of the biggest problems we currently face in the Lean movement is that there are an[…]

After my keynote at the American Society for Quality’s World Conference, I finally caught up with Square Peg Musing’s Scott Rutherford for a podcast interview.[…]

After receiving notification that I won a 2013 Shingo Research Prize for my book, The Outstanding Organization, the Shingo organization asked me to submit a[…]

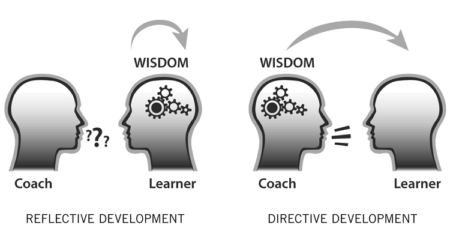

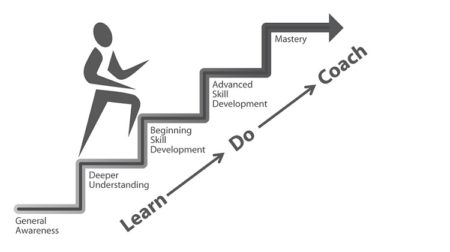

If you’re a leader or an improvement professional, becoming an effective coach is THE capability to be developed and, frankly, most people in these roles[…]

I begin each weekend watching at least one TED Talk. I usually watch them twice: first, to learn and be inspired. Second, to study the[…]

I love learning. Especially when it’s about something I thought I understood already. Enter this gem I received in this morning’s email. This video, produced[…]

Unequivocally, YES. But…there’s more to the story. We don’t throw in the towel if we don’t have all of the preexisting conditions in place. Without[…]

I’m going off-script (pun intended) to share one of my other passions besides operational excellence: the movies. Maybe it came from living for 12 years[…]

We’ve all been there. A boring talk. At a recent conference I keynoted at, I watched as a speaker failed to capture his audience’s attention.[…]

As I explain in the Focus chapter of The Outstanding Organization, multitasking is a fallacy: you can only perform one cognitive task at a time. What you actually[…]

When I learned The Outstanding Organization’s publication date, I groaned. The book was slated for release in July 2012, just as summer vacations were getting[…]

One of my New Year’s resolutions is to implement pull in as many places as I can. Early last year I implemented it quite successfully[…]

I frequently receive calls from prospective clients who seek “training” of some sort. The requests include Lean overviews, problem-solving workshops, value stream mapping training, and[…]

The New Year has always been one of my favorite holidays. Not for the revelry and good cheer, although that can be a nice element.[…]

The new book Mike Osterling and I wrote, Metrics-Based Process Mapping, appears to be striking a chord with many. Yesterday I held a webinar with the highest[…]

“In the unsexy tedium of daily operations, consistency is key.” This is a line from p. 107 of my new book, The Outstanding Organization. As[…]

Businesses routinely attempt to accomplish too much and quickly lose focus when the next fire erupts or a new shiny ball appears. When I work[…]

As many of you know, I’m a passionate advocate of Lean management practices and the power of Lean in transforming organizations. So I grow weary[…]

A disciplined Lean thinker is always looking to improve his or her own personal efficiency and effectiveness. Lately I’ve had ample opportunity to explore how[…]

It’s been interesting to see what people have picked up on as I’ve been promoting my new book, The Outstanding Organization. My original title was[…]

Earlier this week I learned that my blog posts weren’t being pushed to my subscribers as I thought they were. I was blogging away but[…]

Outstanding organizations—indeed outstanding individuals—are highly proficient in two related skills: prioritization and focus. They don’t flit from one project or goal to the next, in[…]

Wednesday’s post featured a local business that I applaud for its Lean efforts. Great customer service, Lean layout, efficient processes, the whole nine yards. Today’s[…]

…Well, I don’t mean literally. But if you don’t commit firmly to breaking bad habits, replacing them with new ones becomes more difficult. And accepting[…]

One of the hazards of being a performance improvement practitioner and coach is that most of us see “opportunities for improvement” everywhere we go and[…]

New clients nearly always list “greater accountability” on their short list of performance improvement goals. Leaders cite missed project deadlines, finger pointing, and “not my problem”[…]

“What is Lean?” may seem a strange question to be asking 15 years after the approach gained recognition in manufacturing and began its progressive spread to other industries. But[…]

I’ve been thinking about blogging for about five years. A few years ago, a friend who knew I was thinking about it (and has long[…]